Also in this section:

ROS Nodes and Launch Files Summary

Setup Networking Between Robot and Workstation

Tutorial: Navigation Using Rviz

Tutorial: Map Making Using Rviz

Tutorial: Drive Using Teleop-Twist-Keyboard

Tutorial: Running the Follower Demo

Tutorial: Gazebo Simulation

Updating the Oculus Prime ROS Packages

Oculus Prime uses the ROS navigation stack for autonomous path planning and simultaneous localization and mapping (SLAM). The Oculus Prime Java Server application provides additional navigation enhancements; it communicates with ROS and the oculusprime_ros package via the telnet API. This page gives a general overview of how the pieces work together.

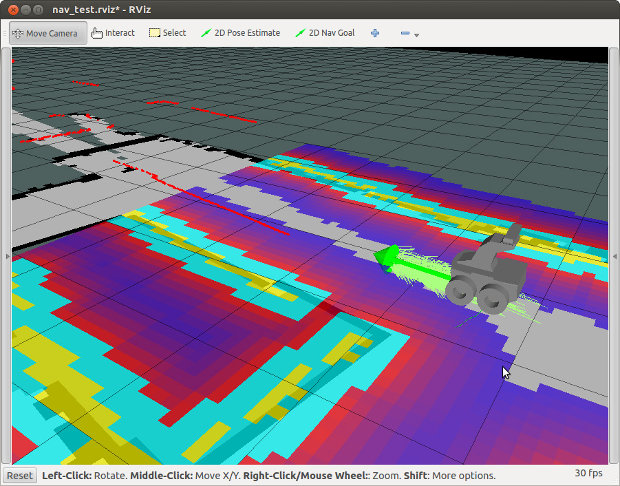

The Oculus Prime Java Server application hides all the underlying ROS complexity and serves up the navigation and mapping functionality within a convenient web browser GUI. However, navigation can still be manipulated the ‘traditional’ ROS way by running the roslaunch commands from the command line and viewing the map using Rviz (see Tutorial: Navigation Using Rviz).

Installation

For instructions on installing ROS and required packages, see here.

Startup

When you start navigation using the Oculus Prime web browser interface by going to

'MENU > navigation > start navigation'

you are executing the startnav telnet command. This in turn runs roslaunch as a system command with the remote_nav.launch file, which gets ROS up and running.

The remote_nav ROS node is the top-level overseer and liaison between ROS and the Oculus Prime Java Server. The launch file also starts the oculusprime_ros nodes odom_tf and arcmove_globalpath_follower, and the rest of the ROS navigation stack.

Odometry

For the robot to localize itself on the map using AMCL, ROS needs accurate odometry information. The odom_tf node within the oculusprime_ros package continuously reads gyro and encoder data, which originates from the robot’s MALG PCB and is exposed by the Oculus Prime Java Server, by reading the distanceangle state value every 1/4 second. It then calculates a best-guess location and velocity, and broadcasts the result as a transform and a nav_msgs/Odometry message over the ROS messaging system (essentially following this method).
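The odometry update described above can be illustrated with a minimal dead-reckoning sketch. This is not the odom_tf source: the function names are hypothetical, the units (meters for distance, degrees for angle) are assumptions about the distanceangle value, and the mid-point heading approximation is one common choice rather than the node's confirmed method.

```python
import math

def integrate_odometry(pose, distance, angle_deg):
    """Advance a 2D pose (x, y, theta) by one odometry sample.

    distance  -- travel since the previous sample (assumed meters)
    angle_deg -- heading change since the previous sample (assumed degrees)
    """
    x, y, theta = pose
    dtheta = math.radians(angle_deg)
    # Use the heading halfway through the step, so a combined
    # turn-and-advance is approximated as a short arc.
    mid_heading = theta + dtheta / 2.0
    x += distance * math.cos(mid_heading)
    y += distance * math.sin(mid_heading)
    # Wrap the new heading into [-pi, pi).
    theta = (theta + dtheta + math.pi) % (2.0 * math.pi) - math.pi
    return (x, y, theta)

def velocities(distance, angle_deg, dt=0.25):
    """Linear (m/s) and angular (rad/s) velocity over one sample period.

    dt defaults to the 1/4 second polling interval mentioned in the text.
    """
    return distance / dt, math.radians(angle_deg) / dt
```

In a real node, the returned pose and velocities would populate the transform and the nav_msgs/Odometry message; that publishing step is omitted here so the sketch runs without ROS installed.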

Simulated Laser Scan Data and Floor Plane Scanning

Depth data from the Orbbec or Xtion depth camera is required to help localize Oculus Prime on the map. The system uses a modified version of the depthimage_to_laserscan package to convert the depth image into a simulated horizontal scan from a laser rangefinder. The modification adds the ability to analyse the floor plane in real time and project any obstacles onto the horizontal scan. This allows the robot to detect and avoid obstacles between the horizontal plane of the sensor and the floor, as well as holes in the floor and cliffs.

For floor plane scanning to work, the sensor’s angle must be as close to horizontal as possible (if you’re running an Xtion sensor, it helps to keep the angle adjusting screw of the camera cranked up tight). The angle can also be tweaked in software, by adding the 'horiz_angle_offset' parameter to the ‘depthimage_to_laserscan’ node section of the fake_laser.launch file and specifying the camera's horizontal angle offset relative to the floor plane, in radians (default is 0).

Floor plane scanning works within a range of about 0.5 to 3 meters. It is disabled by default during map-making, both to save CPU and because obstacles disappear past the working range, which corrupts the map.
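To make the geometry concrete, here is a rough sketch of how a single depth point could be classified against the expected floor plane. It is not the modified depthimage_to_laserscan code: the function name, the tolerance value, the camera height parameter, and the sign convention of the tilt angle (positive means tilted downward) are all assumptions for illustration. Only the 0.5 to 3 meter working range comes from the text above.

```python
import math

def classify_point(forward, down, camera_height, tilt_down=0.0,
                   tolerance=0.03, min_range=0.5, max_range=3.0):
    """Classify one depth point relative to the floor plane.

    forward, down -- point coordinates in the camera frame, in meters
                     (along the optical axis, and perpendicular-downward)
    camera_height -- height of the sensor above the floor, in meters
    tilt_down     -- camera pitch in radians, positive = tilted downward
                     (hypothetical stand-in for an angle offset)
    tolerance     -- assumed noise band around the floor, in meters
    """
    # Rotate the camera-frame point into a level frame.
    horizontal = forward * math.cos(tilt_down) - down * math.sin(tilt_down)
    height = (camera_height
              - forward * math.sin(tilt_down)
              - down * math.cos(tilt_down))

    if not (min_range <= horizontal <= max_range):
        return "out_of_range"
    if height > tolerance:
        return "obstacle"   # something sticking up above the floor
    if height < -tolerance:
        return "cliff"      # floor is missing: hole or drop-off
    return "floor"
```

A point classified as "obstacle" or "cliff" would then be projected onto the horizontal scan at its horizontal distance, so the navigation stack treats it like any other laser return.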

Floor plane scanning can be enabled or disabled during navigation, by changing the 'floorplane_scan_enable' argument in the globalpath_follow.launch file or by running rqt_reconfigure and clicking the corresponding check box.

Path Following

By default, the ROS navigation stack publishes velocity commands from the move_base node. These are typically twist messages broadcast on the cmd_vel topic that specify linear and/or angular velocity. Oculus Prime ignores these completely, because the movement commands coming from the move_base node are optimized for differential drive robots (2 main drive wheels and one or more passive caster-type wheels), NOT for skid-steering robots like Oculus Prime.

The oculusprime_ros node arcmove_globalpath_follower handles path following. Instead of responding to cmd_vel twist messages, it interprets the local_plan and global_plan paths being broadcast by move_base, and sends appropriate movement commands to the Oculus Prime Java Server.

On smooth floors, it follows the local_plan by performing corresponding arc moves. If carpet is detected (indicated by slower than expected turn rates), it instead follows points along the global_plan path by performing turn-in-place and linear moves sequentially. Once the robot is less than 1 meter from the goal, it also switches to global_plan path following, which is more accurate and less likely to overshoot the goal.
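The mode selection described above can be summarized in a short sketch. The function names and the 0.7 turn-rate ratio are hypothetical; only the 1 meter switch-over distance and the carpet heuristic (slower than expected turn rates) come from the text, and the actual arcmove_globalpath_follower logic may differ.

```python
def carpet_detected(expected_turn_rate, measured_turn_rate, ratio=0.7):
    """Guess that the robot is on carpet when it turns noticeably slower
    than commanded. The ratio threshold is an assumed value."""
    return measured_turn_rate < expected_turn_rate * ratio

def choose_follow_mode(distance_to_goal, on_carpet, near_goal_m=1.0):
    """Pick which move_base path to follow.

    Returns "local_plan"  -> follow with arc moves (smooth floors), or
            "global_plan" -> turn-in-place plus linear moves.
    """
    if on_carpet or distance_to_goal < near_goal_m:
        return "global_plan"
    return "local_plan"
```

For example, `choose_follow_mode(2.5, carpet_detected(90.0, 85.0))` selects the local_plan, while the same call with a measured turn rate of 50.0 or a goal distance of 0.8 selects the global_plan.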

Mapping

When you start mapping using the Oculus Prime web browser interface by going to

'MENU > navigation > start mapping'

you are executing the startmapping telnet command. This in turn runs roslaunch as a system command with the make_map.launch file, which starts up ROS and mapping.

The main oculusprime_ros mapping node, map_remote, is launched and acts as the top-level overseer and liaison between ROS and the Oculus Prime Java Server. The oculusprime_ros node odom_tf is also launched, as well as the ROS gmapping package.
