Not too long ago, the availability of low-cost depth sensors suitable for mobile robot auto-navigation and SLAM mapping became a problem. Apple bought PrimeSense, and along with it the intellectual property behind the original Microsoft Kinect and Asus Xtion sensors. The excellent Asus Xtion RGBD camera, which was to be the main SLAM sensor for Oculus Prime, was discontinued. The Kinect 1 was still available in quantity, but it was bigger and heavier, and required separate 12V and 5V power.

And it somehow just looks wrong with a Kinect mounted to Oculus Prime:

So, we decided to explore stereo vision as a possible option. A prototype robot was conjured, sporting two LifeCam Cinema cameras:

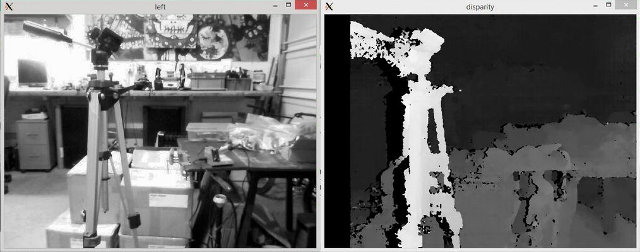

OpenCV’s Semi-Global Block Matching (SGBM) algorithm yielded decent-looking depth data from the combined left and right images. Below left is the left camera view, and on the right is the disparity image generated with the cameras separated by a 60mm baseline. Pixel intensity is proportional to disparity, so brighter pixels are closer to the camera:

A comparison of the depth images from the stereo setup and the Asus Xtion camera looked promising (stereo image on top, Xtion image on bottom, left camera 2D image inset):

In practice, however, with this stereo setup as the data source for ROS SLAM mapping, there were issues. The depth data was quite noisy for some surface textures, and depth accuracy wasn’t very good beyond a few meters. Also, the SGBM algorithm tends to leave gaps in the data for large textureless surfaces.
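The drop in accuracy with range follows from stereo geometry. The sketch below uses the standard rectified-stereo relation, depth = focal length × baseline / disparity, to show how a fixed matching error turns into a growing depth error. Only the 60mm baseline comes from the setup described here; the focal length and the half-pixel matching error are assumed values for illustration, not measurements from the prototype.

```python
# Sketch of the rectified-stereo depth relations.
# Only the baseline is from the text; the other constants are assumptions.
BASELINE_M = 0.060        # 60 mm baseline between the two cameras
FOCAL_PX = 700.0          # hypothetical focal length, in pixels
DISPARITY_ERR_PX = 0.5    # hypothetical matching error, in pixels


def depth_from_disparity(disparity_px, focal_px=FOCAL_PX, baseline_m=BASELINE_M):
    """Depth Z = f * B / d for a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(depth_m, disparity_err_px=DISPARITY_ERR_PX,
                focal_px=FOCAL_PX, baseline_m=BASELINE_M):
    """First-order depth uncertainty: dZ ~= Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    for z in (1.0, 2.0, 4.0, 8.0):
        d = FOCAL_PX * BASELINE_M / z  # disparity that corresponds to depth z
        print(f"depth {z:.0f} m: disparity {d:.2f} px, "
              f"error ~{depth_error(z):.3f} m")
```

Because the error term scales with the square of the depth, doubling the range roughly quadruples the depth uncertainty for the same matching error. A short baseline makes this worse, since the baseline sits in the denominator.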

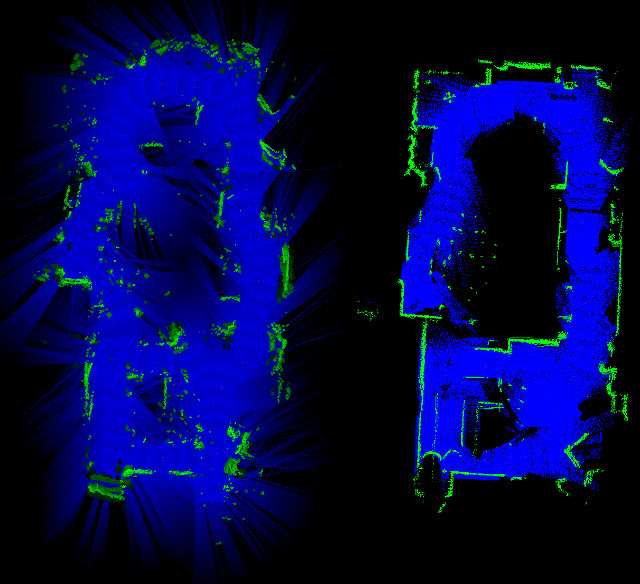

The image below shows a comparison of a plan view map of the same area, generated using the stereo setup on the left, and using the Asus Xtion depth camera on the right (using data from the horizontal plane only, and pure odometry to align the scans):

Stereo map on left, Xtion on right

The stereo scan noise occasionally projected a small false obstacle that would wreak havoc with the ROS path-planner, and the inaccuracy or omission of distant features would cause weak localisation (and a lost robot).

Another problem was the slow speed of the system: the OpenCV Java SGBM processing, with all the other robot functions fighting for CPU time, would only yield 2 frames per second (the prototype stereo-bot had an Atom N2800 CPU). The robot had to be slowed way down while navigating to reduce errors.
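To see why a low frame rate forces a low driving speed, consider how far the robot moves between consecutive depth frames. This is a rough sketch: the 2 frames per second figure is from the prototype, while the example speed and the allowed travel per frame are hypothetical numbers chosen only for illustration.

```python
# Rough sketch: distance travelled between depth frames.
# FPS is from the text; the speed and travel budget are assumed values.
FPS = 2.0                  # frames per second achieved on the prototype
EXAMPLE_SPEED_MPS = 0.3    # hypothetical driving speed, metres per second
MAX_TRAVEL_M = 0.05        # hypothetical allowed travel between frames


def travel_per_frame(speed_mps, fps):
    """Distance covered between two consecutive frames."""
    return speed_mps / fps


def max_speed(max_travel_m, fps):
    """Fastest speed that keeps per-frame travel within the budget."""
    return max_travel_m * fps


if __name__ == "__main__":
    print(f"travel per frame: {travel_per_frame(EXAMPLE_SPEED_MPS, FPS):.3f} m")
    print(f"speed limit: {max_speed(MAX_TRAVEL_M, FPS):.3f} m/s")
```

The speed limit scales linearly with frame rate, so every frame per second lost to CPU contention comes straight out of the usable driving speed.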

In retrospect, an integrated stereo solution like the ZED camera, with some on-board processing and tightly-calibrated cameras, would have been much more effective (if money were no object).

In the end, we stuck with the Asus Xtion, dwindling supply and all, in the hope that the near future would deliver a new low-cost depth sensor with stable supply.

Luckily the Orbbec Astra came along just in time.